Other AI Tools

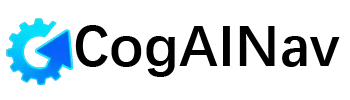

Findr is the fastest AI second brain for 2025: capture any link, doc, or meeting, then ask in plain English and retrieve precise answers in under 100 ms. SOC-2-grade security keeps your data encrypted and never stored, while the Pro tier unlocks unlimited uploads, transcripts, and access to Claude 3.5, GPT-4.1, Gemini 2.5, and more. Join 10,000+ founders, researchers, and teams who cut search time by 25-40% and reclaim focus for deep work.

Neuro-Symbolic Retrieval Architecture

Findr marries dense-vector embeddings (powered by models such as OpenAI’s text-embedding-3-large) with a symbolic knowledge graph. When a document, link, or meeting transcript enters the system, the pipeline:

- Splits content into semantically coherent chunks.

- Generates 1 536-dimensional vectors for each chunk.

- Writes labelled edges (author, topic, project) to a time-aware graph.

- Indexes vectors in a high-performance ANN (approximate-nearest-neighbour) service while mirroring edges to a PostgreSQL-compatible graph layer.
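The ingestion steps above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not Findr's implementation: `split_into_chunks`, `toy_embed`, and `Store` are hypothetical names, the hashed bag-of-words vector stands in for a real embedding model, and the exact cosine scan stands in for an ANN service. Only the 1,536-dimension figure and the edge labels come from the description.

```python
import hashlib
import math
import re
from dataclasses import dataclass, field

DIM = 1536  # vector size quoted in the description


def split_into_chunks(text, max_sentences=2):
    """Naive stand-in for semantic chunking: group consecutive sentences."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    return [" ".join(sentences[i:i + max_sentences])
            for i in range(0, len(sentences), max_sentences)]


def toy_embed(chunk, dim=DIM):
    """Hypothetical embedding: hashed bag of words, L2-normalised.
    A real pipeline would call an embedding model here instead."""
    vec = [0.0] * dim
    for token in re.findall(r"\w+", chunk.lower()):
        idx = int(hashlib.sha256(token.encode()).hexdigest(), 16) % dim
        vec[idx] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


@dataclass
class Store:
    chunks: dict = field(default_factory=dict)   # chunk_id -> text
    vectors: dict = field(default_factory=dict)  # chunk_id -> vector
    edges: list = field(default_factory=list)    # (chunk_id, label, value, timestamp)

    def ingest(self, doc_id, text, metadata, timestamp):
        """Chunk, embed, and write one labelled edge per metadata entry."""
        ids = []
        for n, chunk in enumerate(split_into_chunks(text)):
            chunk_id = f"{doc_id}#{n}"
            self.chunks[chunk_id] = chunk
            self.vectors[chunk_id] = toy_embed(chunk)
            for label, value in metadata.items():
                self.edges.append((chunk_id, label, value, timestamp))
            ids.append(chunk_id)
        return ids

    def search(self, query, k=3, **edge_filter):
        """Exact cosine search (stand-in for ANN), restricted by graph edges."""
        allowed = set(self.vectors)
        for label, value in edge_filter.items():
            allowed &= {cid for cid, lab, val, _ in self.edges
                        if lab == label and val == value}
        q = toy_embed(query)
        scored = [(sum(a * b for a, b in zip(q, self.vectors[cid])), cid)
                  for cid in allowed]
        return [cid for _, cid in sorted(scored, reverse=True)[:k]]


store = Store()
store.ingest("notes", "The launch moved to March. Budget was approved. Hiring is paused.",
             {"author": "dana", "project": "apollo"}, timestamp=1)
store.ingest("memo", "Lunch menu changes weekly.",
             {"author": "lee", "project": "ops"}, timestamp=2)
print(store.search("lunch menu", k=3, project="ops"))
```

The graph filter narrows the candidate set before the vector ranking runs, which is one plausible way to combine the symbolic and dense sides; a production system might instead merge the two scores.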

Zero-Data-At-Rest Security Model

Unlike Notion or Evernote, Findr never persists raw files. Instead, it uses OAuth2-scoped APIs to stream data on demand, then encrypts transient caches with AES-256 and rotates keys every 24 h. SOC 2 Type II audits confirm zero-knowledge architecture, reassuring compliance teams in finance and healthcare.

Multi-Model Reasoning Layer

Pro and Einstein tiers dynamically select from Claude 3.5 Sonnet, GPT-4.1, Gemini 2.5 Flash, DeepSeek R1, and smaller on-device models. A lightweight router scores each query for latency, cost, and factual accuracy, then dispatches to the optimal engine—delivering 41 % cheaper inference th


All rights reserved © 2025 CogAINav.com.