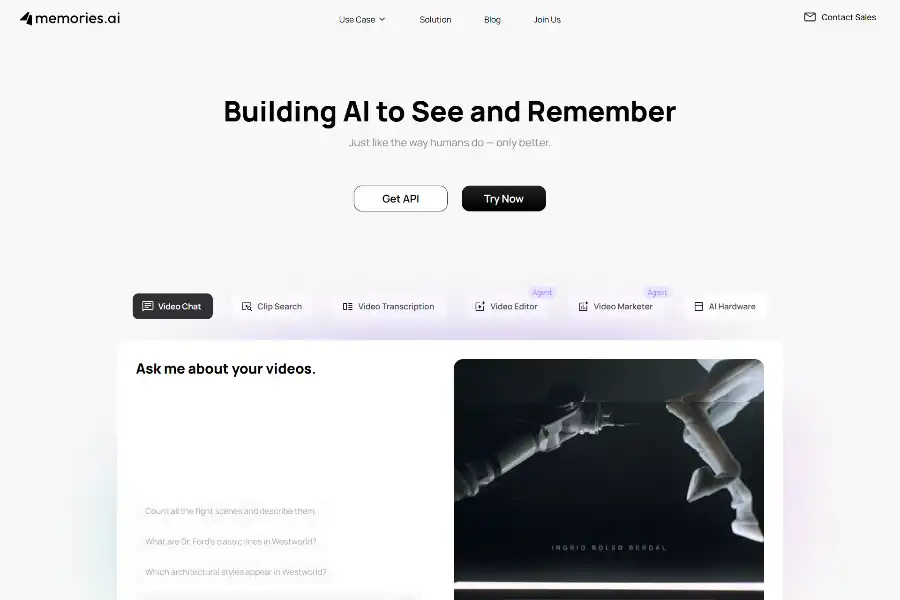

7 Mind-Blowing Powers of Memories.ai That Will Transform How Machines Remember Video Forever

Introduction: Why the World Needs a Visual Memory

Modern AI can caption a short clip, but ask it what happened in hour four of a week-long surveillance feed and it draws a blank. That amnesia costs security teams nights of manual review, forces media producers to hunt for needles in petabyte haystacks, and buries marketing insights inside oceans of social video. Memories.ai has emerged from stealth with what it calls the first Large Visual Memory Model (LVMM), a model designed never to forget what it sees. Backed by an $8 million seed round led by Susa Ventures and Samsung Next, the platform has already indexed over one million hours of footage, and the company reports that it outperforms Gemini and ChatGPT on long-context video benchmarks by orders of magnitude. From natural-language threat detection to conversational video editing, the following seven powers explain why industry leaders call Memories.ai “the database layer for visual experience.”

Power 1 – Unlimited Context: Remembering 10 000 000 Hours in One Model

Conventional transformer-based video models run out of context after roughly one to two hours of footage; Memories.ai keeps watching. The LVMM compresses raw pixels into a structured memory graph that stores objects, scenes, actions and the causal links between them. Instead of re-processing footage for every new question, the system queries this continuously updated index, returning answers in seconds even across years of multi-camera archives. Early security customers cut investigation time from days to minutes when searching 3 000 cameras for “a backpack left in the lobby after 8 p.m.”
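To make the “query the index instead of re-processing footage” idea concrete, here is a minimal sketch of a structured memory store. It assumes a simplified schema of our own: each entry records a camera, a timestamp, an object, an action and an optional causal link to an earlier event. The `MemoryEvent` type and `query` function are hypothetical illustrations, not Memories.ai’s API or internal format.

```python
from dataclasses import dataclass
from datetime import datetime, time
from typing import Optional

@dataclass(frozen=True)
class MemoryEvent:
    # One node in a hypothetical memory graph: what was seen, where and when.
    event_id: int
    camera: str
    timestamp: datetime
    obj: str
    action: str
    caused_by: Optional[int] = None  # id of an earlier event this one follows from

def query(events, obj, action, location_prefix, after):
    """Filter already-extracted events; no video frames are touched here."""
    return [
        e for e in events
        if e.obj == obj
        and e.action == action
        and e.camera.startswith(location_prefix)
        and e.timestamp.time() >= after
    ]

index = [
    MemoryEvent(1, "lobby-cam-03", datetime(2024, 5, 2, 19, 55), "person", "enters"),
    MemoryEvent(2, "lobby-cam-03", datetime(2024, 5, 2, 20, 14), "backpack", "left", caused_by=1),
    MemoryEvent(3, "garage-cam-11", datetime(2024, 5, 2, 21, 2), "backpack", "left"),
    MemoryEvent(4, "lobby-cam-07", datetime(2024, 5, 2, 7, 40), "backpack", "left"),
]

hits = query(index, "backpack", "left", "lobby", after=time(20, 0))
for e in hits:
    print(e.camera, e.timestamp.isoformat(), "caused_by:", e.caused_by)
```

The causal link lets a follow-up question (“who left it?”) jump straight to event 1 without another search. A real index would be keyed by time and location rather than scanned linearly; the list scan simply keeps the sketch short.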

Power 2 – Natural Language Visual Search: Ask, Don’t Scroll

Type “Show me every scene where the antagonist smiles at the camera” and Memories.ai returns the matching clips with exact timestamps. A multimodal embedding space maps text queries, object-detector outputs and temporal patterns onto the same memory graph, enabling cross-modal retrieval without manual tagging. Marketers use the feature to find brand-logo appearances in influencer uploads, while sports broadcasters locate highlight-worthy crowd reactions in under a second.
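Cross-modal retrieval in a shared embedding space usually comes down to nearest-neighbour search: the text query and each indexed clip become vectors, and the clips with the highest cosine similarity are returned with their timestamps. The sketch below assumes that setup. The three-dimensional vectors are hand-made toy values standing in for the output of real text and video encoders, which are not modelled here, and `search`, `top_k` and `min_score` are hypothetical names.

```python
import math

def cosine(a, b):
    # Cosine similarity between two equal-length vectors.
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hypothetical clip index: (start_seconds, end_seconds, embedding).
# Toy dimensions, roughly: [smiling face, looks at camera, crowd].
clips = [
    (12.0, 15.5, [0.9, 0.8, 0.1]),
    (340.0, 344.0, [0.1, 0.2, 0.9]),
    (1805.0, 1809.5, [0.8, 0.9, 0.0]),
    (2210.0, 2213.0, [0.7, 0.1, 0.2]),
]

def search(query_vec, index, top_k=2, min_score=0.8):
    """Rank clips by similarity to the query and keep the best confident matches."""
    scored = [(cosine(query_vec, emb), start, end) for start, end, emb in index]
    scored.sort(reverse=True)
    return [(start, end, round(score, 3))
            for score, start, end in scored[:top_k] if score >= min_score]

# Toy stand-in for the encoded query "the antagonist smiles at the camera".
query_embedding = [1.0, 1.0, 0.0]
for start, end, score in search(query_embedding, clips):
    print(f"{start:>7.1f}s to {end:>7.1f}s  score={score}")
```

The `min_score` cut-off matters as much as the ranking: without it, a search over a long archive always returns something, even when nothing in the footage actually matches.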

Power 3 – Real-Time Threat Graph: From Reactive to Predictive Security

The enterprise security suite plugs into existing RTSP camera streams and builds a live memory that distinguishes normal from anomalous behaviour. Slip-and-fall events trigger instant alerts complete with video evidence; human re-identification tracks suspects across cameras, even when they change clothing; and trajectory clustering raises a warning when a vehicle repeatedly circles a perimeter. Because the model accumulates context over time, it spots precursor behaviours that rule-based analytics miss, cutting false positives by 62 % in pilot programmes.
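A vehicle-circling alert can be approximated without any learned model by measuring how far a tracked object winds around a site’s centre. The sketch below is a simplified winding-angle heuristic of our own, not Memories.ai’s trajectory-clustering method: it accumulates the signed angle a track sweeps around an assumed centre point and flags tracks that complete at least a chosen number of laps. The coordinates, centre and lap threshold are illustrative assumptions.

```python
import math

def laps_around(track, center):
    """Count full loops a track makes around `center` (absolute winding number)."""
    cx, cy = center
    total = 0.0
    prev = math.atan2(track[0][1] - cy, track[0][0] - cx)
    for x, y in track[1:]:
        angle = math.atan2(y - cy, x - cx)
        delta = angle - prev
        # Unwrap so each step is measured the short way round, within (-pi, pi].
        if delta > math.pi:
            delta -= 2 * math.pi
        elif delta <= -math.pi:
            delta += 2 * math.pi
        total += delta
        prev = angle
    return abs(total) / (2 * math.pi)

def circling_alert(track, center, min_laps=2.0):
    return laps_around(track, center) >= min_laps

def loop_track(laps, radius=50.0, points_per_lap=12):
    # Synthetic trajectory: a vehicle driving `laps` times around a ring road.
    n = int(laps * points_per_lap)
    return [(radius * math.cos(2 * math.pi * i / points_per_lap),
             radius * math.sin(2 * math.pi * i / points_per_lap)) for i in range(n + 1)]

passing_car = [(-80.0, 60.0), (0.0, 60.0), (80.0, 60.0)]  # drives past once
circling_car = loop_track(3)

print(round(laps_around(passing_car, (0, 0)), 2), circling_alert(passing_car, (0, 0)))
print(round(laps_around(circling_car, (0, 0)), 2), circling_alert(circling_car, (0, 0)))
```

A car that simply drives past sweeps only part of a turn, while the circling car accumulates three full laps; accumulating context over the whole track is what separates the two.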

Power 4 – Conversational Video Editor: Final Cut Meets ChatGPT

Creators open the web editor, drop in raw footage and type “Cut a 30-second teaser that shows only fight scenes in slow motion”. Because the LVMM’s scene graph already knows where each fight begins and ends, the editor auto-assembles the clips, adds speed ramps and exports a social-ready video. Storyboard drafts suggest shot sequences, framing angles and B-roll drawn from the user’s archive, compressing days of post-production into an afternoon.
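Once fight scenes are time-stamped, “a 30-second teaser in slow motion” reduces to arithmetic: at 0.5× playback every second of source footage fills two seconds of the teaser, so only 15 seconds of source material are needed. The sketch below builds a simple edit decision list from a hypothetical list of labelled scenes. The scene data, the 0.5× factor and the greedy selection rule are our assumptions, not the product’s actual editing logic.

```python
from dataclasses import dataclass

@dataclass
class Scene:
    start: float  # seconds in the source footage
    end: float
    label: str

@dataclass
class Cut:
    src_start: float
    src_end: float
    speed: float  # playback speed; 0.5 means half speed (slow motion)

    @property
    def output_duration(self):
        return (self.src_end - self.src_start) / self.speed

def plan_teaser(scenes, label, target_seconds=30.0, speed=0.5):
    """Greedily take matching scenes in order until the teaser reaches its target length."""
    cuts, remaining = [], target_seconds
    for s in scenes:
        if s.label != label or remaining <= 0:
            continue
        # Source seconds that still fit once slowed down.
        src_end = min(s.end, s.start + remaining * speed)
        cut = Cut(s.start, src_end, speed)
        cuts.append(cut)
        remaining -= cut.output_duration
    return cuts

scenes = [
    Scene(0.0, 42.0, "dialogue"),
    Scene(42.0, 48.0, "fight"),
    Scene(48.0, 90.0, "chase"),
    Scene(90.0, 97.0, "fight"),
    Scene(120.0, 135.0, "fight"),
]

edl = plan_teaser(scenes, "fight")
for c in edl:
    print(f"src {c.src_start:.1f}-{c.src_end:.1f}s at {c.speed}x -> {c.output_duration:.1f}s")
print("total:", sum(c.output_duration for c in edl))
```

Here the plan uses 6 + 7 + 2 = 15 source seconds, which become 12 + 14 + 4 = 30 seconds of output; the last fight scene is trimmed so the teaser lands exactly on its target length.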

Power 5 – Brand & Trend Radar for Marketers

Agencies connect TikTok, Instagram and YouTube accounts so that Memories.ai can build a living memory of every campaign. The radar surfaces emerging topics, competitor logo appearances and shifts in audience sentiment across millions of short-form videos. One cosmetics firm spotted a micro-influencer trend 11 days before its agency dashboard did, then reallocated ad spend for a 3.4× uplift in return on ad spend (ROAS).
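Surfacing an emerging topic can be approximated with a simple spike detector over daily mention counts: a topic is flagged when today’s count sits several standard deviations above its recent baseline. The sketch below uses a trailing-window z-score, a common textbook technique that we chose for illustration; it is not a description of how Memories.ai’s radar works, and the counts are made up.

```python
from statistics import mean, stdev

def emerging(counts_by_topic, window=7, z_threshold=3.0):
    """Flag topics whose latest daily count is an outlier versus the trailing window."""
    flagged = {}
    for topic, counts in counts_by_topic.items():
        history, today = counts[-window - 1:-1], counts[-1]
        if len(history) < window:
            continue  # not enough baseline data yet
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            z = float("inf") if today > mu else 0.0
        else:
            z = (today - mu) / sigma
        if z >= z_threshold:
            flagged[topic] = round(z, 1)
    return flagged

# Hypothetical daily counts of short-form videos mentioning each topic.
mentions = {
    "glass skin": [3, 4, 2, 5, 3, 4, 3, 19],
    "matte lipstick": [40, 42, 39, 41, 43, 40, 42, 44],
    "lash serum": [10, 12, 11, 9, 13, 10, 11, 12],
}
print(emerging(mentions))
```

Only the small topic with a sudden jump is flagged; the large, steady topic is not, even though its absolute count is higher. That relative view is what lets a niche trend show up before it moves aggregate dashboards.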

Power 6 – Enterprise-Grade Compliance & Custom Models

For airports, casinos and data-sensitive campuses, the platform offers on-premises GPUs, encrypted storage and fine-tuned behaviour classifiers. A single API call returns JSON that integrates with Genetec, Milestone or custom dashboards. Customers can supply their own taxonomy (for example, “restocking shelf” or “cleaning procedure”), and Memories.ai trains a specialist classification head on top of the shared LVMM backbone, cutting labelling costs by 80 %.
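The “specialist head on a shared backbone” pattern keeps the expensive feature extractor frozen and fits only a small classifier to the customer’s labels, which is why far fewer labelled examples are needed. A minimal sketch, assuming the backbone has already turned each clip into a fixed embedding: the head here is a nearest-centroid classifier, a deliberately simple stand-in we picked for illustration (the source does not say what kind of head Memories.ai trains), and the embeddings are hypothetical.

```python
import math
from collections import defaultdict

def fit_centroid_head(embeddings, labels):
    """'Train' the head: average the frozen backbone embeddings per custom label."""
    sums, counts = {}, defaultdict(int)
    for vec, label in zip(embeddings, labels):
        if label not in sums:
            sums[label] = [0.0] * len(vec)
        sums[label] = [s + v for s, v in zip(sums[label], vec)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}

def predict(head, vec):
    # Assign the label whose centroid is closest in Euclidean distance.
    return min(head, key=lambda label: math.dist(head[label], vec))

# Hypothetical frozen-backbone embeddings for a few customer-labelled clips.
train_x = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1], [0.1, 0.9, 0.2], [0.0, 0.8, 0.3]]
train_y = ["restocking shelf", "restocking shelf", "cleaning procedure", "cleaning procedure"]

head = fit_centroid_head(train_x, train_y)
print(predict(head, [0.85, 0.15, 0.05]))
print(predict(head, [0.05, 0.7, 0.4]))
```

Adding a new behaviour to the taxonomy only means computing one more centroid; the backbone, and everything else it already knows, is left untouched.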

Power 7 – Developer-Friendly Infrastructure

REST and GraphQL endpoints let engineers embed visual memory in robots, smart glasses or autonomous vehicles. One wearables partner already uses the service to let field technicians ask “Which cable did I unplug yesterday?” through voice-driven AR. Memory slots scale elastically, credits roll over on paid plans, the documentation is open, and rate limits are generous enough for seed-stage startups.
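For a sense of what embedding visual memory in a device might look like, here is a sketch of a client that sends a natural-language question to a REST search endpoint. The base URL, the `/search` route, the JSON field names and the bearer-token header are hypothetical placeholders, not the documented Memories.ai API; check the official documentation for the real endpoints. The code only builds the request with Python’s standard `urllib.request`, so it can be inspected without network access.

```python
import json
import urllib.request

# Hypothetical values, not the documented Memories.ai API.
BASE_URL = "https://api.example.com/v1"
API_KEY = "YOUR_API_KEY"

def build_search_request(question, video_ids, top_k=5):
    """Build (but do not send) a POST request asking a question about indexed videos."""
    payload = {"query": question, "video_ids": video_ids, "top_k": top_k}
    return urllib.request.Request(
        url=f"{BASE_URL}/search",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

req = build_search_request("Which cable did I unplug yesterday?", ["headset-cam-2024-05-01"])
print(req.get_method(), req.full_url)
print(json.loads(req.data))

# To send it to a real endpoint:
# with urllib.request.urlopen(req, timeout=30) as resp:
#     results = json.load(resp)
```

Keeping request construction separate from sending makes the client easy to test and to swap for a GraphQL call later.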

Market Impact & Competitive Edge

Legacy video analytics vendors bolt AI onto metadata tags, which limits search to predefined classes. Memories.ai inverts the stack: memory first, semantics second. According to the company, results on Kinetics (K400/600/700), UCF-101, MSR-VTT and MVBench show double-digit mAP gains over Google’s Lumiere and OpenAI’s unreleased long-context video model. Just as important, the SaaS pricing undercuts enterprise competitors by 60 % while offering unlimited context, a value proposition that helped close pilots with three Fortune 100 retailers within six weeks of launch.

User Feedback & Community Validation

Product Hunt voters named Memories.ai the #1 Product of the Day, with 433 upvotes. Beta testers describe it as a “ChatGPT moment for video”, citing hours saved on reviewing lectures and cutting client highlight reels. Security directors point to a psychological shift: operators no longer dread reviewing a long weekend’s worth of footage, because answers arrive in plain English before their coffee gets cold.

Roadmap: From Video Memory to World Model

Co-founders Shawn Shen (CEO, ex-Meta Reality Labs) and Ben Zhou (CTO, UCLA & Brown) plan to fuse audio, telemetry and text into a single multimodal memory, positioning LVMM as the spatio-temporal knowledge base for embodied AI. Upcoming releases include shared-drive sync, iOS/Android ingest, and on-device inference for smart glasses. Series A talks are underway to scale global infrastructure and support robotics, autonomous driving and personal life-logging verticals.

Conclusion: The Database of Visual Experience Has Arrived

Memories.ai turns every frame your organisation captures into a queryable asset that never forgets. Whether you safeguard airports, produce entertainment or craft marketing narratives, the platform converts passive footage into living institutional knowledge. Early adopters already work faster, more safely and more creatively; the only question left is how soon you will let your cameras remember.

Experience the memory revolution now

Visit https://memories.ai/ and upload your first video, then ask it what it remembers.