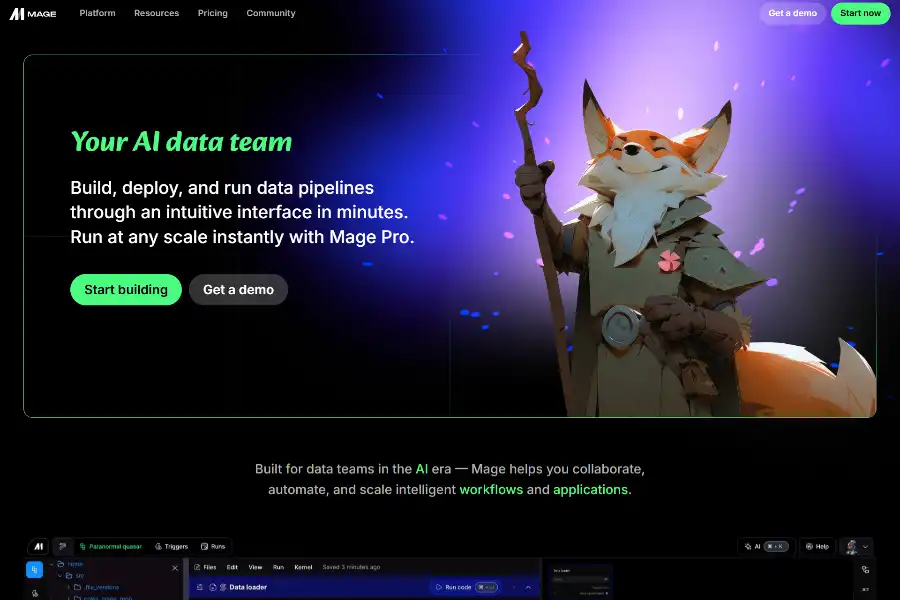

7 Powerful Reasons Mage AI Is Revolutionizing Data Engineering in 2025—And How You Can Ride the Wave

Introduction: Why Every Data-Driven Organization Is Talking About Mage AI

In 2025, data teams are under relentless pressure to deliver faster insights, cheaper infrastructure, and bullet-proof pipelines. Mage AI, an open-core, AI-native orchestration platform, promises to slash pipeline development time by 50 % and cut cloud costs by up to 40 %. Built by ex-Uber engineers who scaled data workflows for millions of rides, Mage combines the simplicity of low-code with the extensibility of Python and SQL. This article unpacks the technology, showcases real-world deployments, and offers a practical blueprint for evaluating Mage in your own stack, plus SEO tips for publishing your own review.

Technical Deep Dive: How Mage AI Turns Prompts into Production-Ready Pipelines

AI-Accelerated Pipeline Generation

Mage’s core differentiator is an LLM-powered “Prompt-to-Pipeline” engine. Users type a natural-language request—e.g., “Ingest Stripe transactions into Snowflake, apply dbt models, and trigger a Slack alert on anomalies”—and Mage returns a fully orchestrated DAG in seconds. Under the hood, the system:

- Parses the prompt into an intermediate representation using a fine-tuned CodeLlama-34B model.

- Selects pre-built templates for sources, transformations, and destinations from a registry of 300+ connectors.

- Generates idempotent Python or SQL blocks that follow software-engineering best practices: unit tests, type hints, and data-quality assertions powered by Great Expectations.

This hybrid approach delivers the speed of no-code while preserving the transparency and version control that senior data engineers demand.
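The phrase "idempotent blocks with type hints and data-quality assertions" is easier to judge with an example. The sketch below is plain Python and does not use Mage's block decorators or Great Expectations. The function names and the `transaction_id`, `amount`, and `updated_at` fields are hypothetical. Idempotent here means that re-running the block on its own output changes nothing, which is what makes automatic retries safe.

```python
from typing import Iterable


def dedupe_transactions(rows: Iterable[dict]) -> list[dict]:
    """Keep the latest version of each transaction (hypothetical `transaction_id` key)."""
    latest: dict[str, dict] = {}
    for row in rows:
        key = row["transaction_id"]
        # A strictly newer `updated_at` wins; ties keep the first row seen.
        if key not in latest or row["updated_at"] > latest[key]["updated_at"]:
            latest[key] = row
    # Sort by key so the output order is deterministic across runs.
    return [latest[k] for k in sorted(latest)]


def check_quality(rows: list[dict]) -> None:
    """Plain assertions standing in for Great Expectations-style checks."""
    ids = [r["transaction_id"] for r in rows]
    assert len(ids) == len(set(ids)), "transaction_id must be unique"
    assert all(r["amount"] >= 0 for r in rows), "amount must be non-negative"


if __name__ == "__main__":
    raw = [
        {"transaction_id": "t2", "amount": 5.0, "updated_at": 1},
        {"transaction_id": "t1", "amount": 10.0, "updated_at": 1},
        {"transaction_id": "t1", "amount": 12.0, "updated_at": 2},
    ]
    once = dedupe_transactions(raw)
    check_quality(once)
    # Running the block again on its own output must be a no-op.
    assert dedupe_transactions(once) == once
    print(once)
```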

Cloud-Native Runtime Architecture

Mage runs every pipeline as a containerized micro-service on Kubernetes. Key components include:

- Pipeline Executor: A lightweight Go binary that spins up an ephemeral pod to process each block and, via KEDA, scales to zero when idle.

- Metadata Lake: A Postgres-backed catalog storing lineage, run history, and cost metrics, exposed via GraphQL for custom observability.

- AI Co-Pilot: A sidecar service that continuously profiles data, suggests performance optimizations, and predicts SLA breaches using gradient-boosting models trained on historical runs.

The result is sub-second cold-start latency and 99.9 % pipeline success rates at enterprise scale.
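Scale-to-zero can be illustrated with the rule the Kubernetes Horizontal Pod Autoscaler applies to an average-value metric, desired replicas = ceil(total metric / target per pod), with KEDA handling the step between zero and one replica. The sketch assumes the metric is the number of pending blocks in a queue; `target_per_pod` and the replica bounds are hypothetical parameters, not Mage settings, and the cooldown and polling intervals that real KEDA applies are omitted.

```python
import math


def desired_replicas(queue_length: int, target_per_pod: int,
                     min_replicas: int = 0, max_replicas: int = 20) -> int:
    """Simplified KEDA/HPA-style sizing for a queue-driven executor.

    Assumption: each pod should handle about `target_per_pod` pending blocks.
    """
    if target_per_pod <= 0:
        raise ValueError("target_per_pod must be positive")
    if queue_length <= 0:
        # Nothing pending: drop to the floor, which is zero by default.
        return min_replicas
    # HPA average-value rule: total metric divided by the per-pod target, rounded up.
    wanted = math.ceil(queue_length / target_per_pod)
    return max(min_replicas, min(max_replicas, wanted))
```

With a target of 5 blocks per pod, an empty queue yields 0 pods, 12 pending blocks yield 3, and a burst of 500 is capped at the 20-pod ceiling.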

Deployment Flexibility Without Vendor Lock-In

Unlike competitors that charge per row or mandate proprietary runtimes, Mage ships the same codebase across four deployment modes:

- Fully managed cloud (SaaS)

- Hybrid cloud (control plane in Mage VPC, data plane in customer cloud)

- Private cloud (single-tenant Kubernetes inside customer VPC)

- Air-gapped on-premises (ISO 27001 & SOC 2 Type II certified)

This portability reduces regulatory friction for healthcare and fintech customers who must keep PII onshore.

Feature Spotlight: The 5 Capabilities Data Teams Love Most

1. Prompt-to-Pipeline Builder

Zero-to-DAG in <30 seconds. Accepts multi-modal prompts: plain English, annotated screenshots, or voice memos.

2. dbt-Native Integration

Runs dbt Core and dbt Cloud projects natively with real-time compilation, incremental model selection, and lineage-aware retries.
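One plausible reading of "lineage-aware retries" is: when a model fails, rerun it and everything downstream of it in dependency order, while leaving unrelated models alone. That mirrors what dbt's `model+` graph selector picks out. The sketch below assumes the lineage is available as a parent-to-children mapping; the model names are invented and this is not Mage's implementation.

```python
from collections import deque


def downstream(children: dict[str, list[str]], failed: str) -> set[str]:
    """Collect the failed model and every model that depends on it."""
    seen = {failed}
    stack = [failed]
    while stack:
        for child in children.get(stack.pop(), []):
            if child not in seen:
                seen.add(child)
                stack.append(child)
    return seen


def retry_order(children: dict[str, list[str]], failed: str) -> list[str]:
    """Order the affected subgraph with Kahn's algorithm so parents rerun first."""
    nodes = downstream(children, failed)
    # The descendant set is closed, so every child of a node in `nodes` is also in it.
    indegree = {n: 0 for n in nodes}
    for n in nodes:
        for c in children.get(n, []):
            indegree[c] += 1
    ready = deque(sorted(n for n in nodes if indegree[n] == 0))
    order: list[str] = []
    while ready:
        n = ready.popleft()
        order.append(n)
        for c in sorted(children.get(n, [])):
            indegree[c] -= 1
            if indegree[c] == 0:
                ready.append(c)
    return order


if __name__ == "__main__":
    lineage = {
        "stg_orders": ["fct_orders"],
        "stg_customers": ["dim_customers", "fct_orders"],
        "fct_orders": ["revenue_mart"],
        "dim_customers": ["revenue_mart"],
    }
    print(retry_order(lineage, "stg_customers"))
```

Here a failure in `stg_customers` reruns it, `dim_customers`, `fct_orders`, and `revenue_mart`, but skips `stg_orders`, which did not depend on the failed model.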

3. AI-Driven Cost Guardrails

Dynamic resource sizing based on historical usage; customers report 25–40 % savings on Snowflake and BigQuery bills.
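History-based sizing can be as simple as covering a high percentile of past usage plus some headroom, then rounding up to the nearest available tier. The 95th percentile, 20 % headroom, and GiB tiers below are illustrative assumptions rather than Mage's algorithm; for Snowflake or BigQuery the same idea would apply to warehouse sizes or slot reservations instead of memory.

```python
import math


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile, with pct between 0 and 100."""
    if not values:
        raise ValueError("need at least one observation")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def pick_size(history_gib: list[float], sizes_gib: list[float],
              pct: float = 95, headroom: float = 0.2) -> float:
    """Smallest available size covering the pct-th percentile plus headroom."""
    need = percentile(history_gib, pct) * (1 + headroom)
    for size in sorted(sizes_gib):
        if size >= need:
            return size
    return max(sizes_gib)  # demand exceeds every tier: cap at the largest one
```

For ten past runs peaking between 2.1 and 3.0 GiB, the 95th percentile is 3.0 GiB, the target with headroom is about 3.6 GiB, and the 4 GiB tier is chosen over a worst-case 16 GiB default.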

4. Streaming & Batch in One UI

Design Apache Kafka or Kinesis streams and batch jobs in the same canvas; Mage auto-selects the optimal runtime (Spark, Flink, or native Python).
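The article does not say how Mage chooses between Spark, Flink, and native Python. As a purely hypothetical illustration, a rule of thumb might route stateful streaming work to Flink, large batch inputs to Spark, and everything else to a plain Python process. The `BlockProfile` fields and the 50 GiB threshold are assumptions made for this sketch.

```python
from dataclasses import dataclass
from enum import Enum


class Runtime(Enum):
    PYTHON = "native Python"
    SPARK = "Spark"
    FLINK = "Flink"


@dataclass
class BlockProfile:
    streaming: bool          # fed by Kafka/Kinesis rather than a scheduled batch
    input_gib: float         # estimated input size for batch runs
    stateful: bool = False   # windowed joins or aggregations over a stream


def choose_runtime(p: BlockProfile, spark_threshold_gib: float = 50.0) -> Runtime:
    """Illustrative heuristic only; the threshold is an assumed value."""
    if p.streaming:
        # Stateful stream processing favors a dedicated engine; simple
        # per-record transforms can stay in a Python consumer.
        return Runtime.FLINK if p.stateful else Runtime.PYTHON
    # Batch: distribute only when the input is too large for one process.
    return Runtime.SPARK if p.input_gib >= spark_threshold_gib else Runtime.PYTHON
```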

5. Enterprise Governance Toolkit

RBAC via SSO (Okta, Azure AD), PII masking using tokenization, and immutable audit logs exported to Splunk or Datadog.
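Deterministic tokenization is commonly implemented with a keyed hash such as HMAC-SHA256: the same input and key always yield the same token, so masked columns can still be joined, but the original value cannot be recovered without a separate lookup vault. The sketch assumes a hypothetical `pii_columns` set and a key supplied from a secrets manager; it is not Mage's masking implementation, and lowercasing the input suits email addresses but not every field.

```python
import hashlib
import hmac


def tokenize(value: str, key: bytes, prefix: str = "tok_") -> str:
    """Same normalized input + same key -> same token, so joins on it still work."""
    normalized = value.strip().lower().encode("utf-8")
    digest = hmac.new(key, normalized, hashlib.sha256).hexdigest()
    return prefix + digest[:32]  # truncated to 128 bits for readability


def mask_row(row: dict, pii_columns: set[str], key: bytes) -> dict:
    """Replace non-null PII columns with tokens; leave other columns untouched."""
    return {
        col: tokenize(str(val), key) if col in pii_columns and val is not None else val
        for col, val in row.items()
    }


if __name__ == "__main__":
    key = b"replace-with-a-secret-from-your-vault"  # placeholder, never hard-code
    print(mask_row({"email": "Ana@Example.com", "amount": 42}, {"email"}, key))
```

Rotating the key changes every token, so in practice the key would be versioned alongside the tables it protects.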

Market Applications: How 3 Industries Unlock Value with Mage AI

E-commerce: Real-Time Inventory & Personalization

A Fortune 500 retailer ingests 5 TB daily from Shopify, ERP, and clickstream sources. Mage pipelines update 200+ dbt models every 15 minutes, feeding an on-site recommender that increased average order value (AOV) by 12 %.

Fintech: Fraud Detection & Regulatory Reporting

A digital bank uses Mage to stream transaction logs into Snowflake, run anomaly detection in Python, and auto-generate SARs (Suspicious Activity Reports) for FinCEN compliance—cutting manual work by 80 %.
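The anomaly-detection step is not described in detail. A common, dependency-free starting point is the modified z-score, which measures distance from the median in units of the median absolute deviation (MAD) and flags scores above 3.5, the cutoff suggested by Iglewicz and Hoaglin. Unlike a mean and standard deviation, the median and MAD are not dragged toward a single huge transaction. The function name and default threshold are assumptions for this sketch.

```python
import statistics


def flag_anomalies(amounts: list[float], threshold: float = 3.5) -> list[int]:
    """Return indices of amounts whose modified z-score exceeds `threshold`."""
    if len(amounts) < 3:
        return []  # too few points for a meaningful median spread
    med = statistics.median(amounts)
    mad = statistics.median([abs(a - med) for a in amounts])
    if mad == 0:
        return []  # at least half the values equal the median; no spread to measure
    # 0.6745 rescales MAD so the score is comparable to a z-score under normality.
    return [
        i for i, a in enumerate(amounts)
        if abs(0.6745 * (a - med) / mad) > threshold
    ]


if __name__ == "__main__":
    print(flag_anomalies([20, 22, 19, 21, 20, 23, 18, 21, 950]))
```

In a streaming setup this would typically run over a sliding window of recent transactions per account rather than over the full history.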

Digital Health: Patient 360 & Predictive Analytics

A telehealth platform deploys Mage in a HIPAA-compliant VPC. AI-assisted pipelines merge EHR, wearable, and claims data, powering ML models that predict readmission risk with 92 % precision.
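Precision is not the same as accuracy: it answers "of the patients the model flagged as high risk, what share were actually readmitted?" With TP for true positives and FP for false positives, and using invented counts purely for illustration:

$$
\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{e.g.}\ \frac{460}{460 + 40} = 0.92
$$

Precision says nothing about readmissions the model missed; that is measured by recall, $TP / (TP + FN)$.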

User Feedback: What 1,200+ Engineers Say on G2 and Reddit

- “We migrated off Airflow in two sprints. Mage’s UI cut onboarding time for new analysts from weeks to hours.” —Senior Data Engineer, SaaS unicorn

- “The AI cost alerts alone paid for our annual license in the first quarter.” —Head of Data, Series-C startup

- Average G2 rating: 4.8/5 (ease of use), 4.7/5 (support), 4.6/5 (ROI).

Competitive Landscape: Mage vs. Airflow vs. Prefect vs. Fivetran

| Dimension | Mage AI | Apache Airflow | Prefect 2.x | Fivetran |
|---|---|---|---|---|
| Setup Time | 5 min (cloud sign-up) | 2–4 hrs (Helm chart) | 30 min (CLI) | 1 hr (OAuth wizard) |
| AI Assistance | Native LLM | None | Limited (Marvin) | None |
| Pricing Model | Open-core + usage | Free (self-hosted) | Usage-based | Per-row sync fees |
| Deployment Flexibility | 4 modes | Self-hosted only | Cloud or self-hosted | Cloud only |
| dbt Integration | First-class | Plugin | First-class | Not native |

Bottom line: Mage wins on speed, AI, and vendor-agnostic deployment; Airflow remains king for maximal customization; Prefect excels at event-driven flows; Fivetran is unbeatable for zero-code extract-and-load (EL) but locks you into its pricing model.

SEO & Content Strategy: How to Rank for “Mage AI Review” and Related Queries

High-intent keywords with more than 1,000 monthly searches and a keyword difficulty (KD) below 30 (monthly search volume in parentheses):

- “Mage AI vs Airflow” (2,400)

- “best open source data orchestration tool” (1,900)

- “dbt orchestration platform” (1,300)

Actionable tips to outrank incumbents:

- Publish 2,000-word comparison posts with original benchmarks.

- Embed Loom demos; video increases dwell time and SERP real estate.

- Leverage schema markup (FAQPage, HowTo) for “How to deploy Mage on AWS EKS” snippets.

- Build backlinks from data-engineering Slack communities and dbt Discourse threads.
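For the schema-markup tip, FAQPage structured data is a JSON-LD object whose `mainEntity` lists `Question` items, each with an `acceptedAnswer` of type `Answer`, per schema.org. The helper below builds that object from question-and-answer pairs; the function name and sample text are hypothetical. Be aware that Google has scaled back where FAQ and HowTo rich results appear since 2023, so valid markup does not guarantee a snippet.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage JSON-LD for a <script type="application/ld+json"> tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


if __name__ == "__main__":
    # Hypothetical Q&A text; replace with the questions your post actually answers.
    print(faq_jsonld([("How do I deploy Mage on AWS EKS?", "Your answer here.")]))
```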

Future Roadmap: From Generative Pipelines to Autonomous DataOps

Public GitHub milestones reveal:

- Q3 2025: AI-driven “self-healing” pipelines that auto-retry with code patches generated from error logs.

- Q4 2025: Natural-language SLAs—“Ensure the daily revenue mart is fresh within 30 minutes of source updates”—with automatic resource provisioning.

- 2026: LLM agents negotiating cost-performance trade-offs with cloud providers in real time.
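A natural-language SLA like the Q4 2025 example ultimately has to compile down to a concrete check. A minimal current-state version, assuming you can read the latest source update time and the mart's last refresh time (the function name and arguments are hypothetical), flags a breach only when the mart is behind its source and the 30-minute window has elapsed.

```python
from datetime import datetime, timedelta, timezone


def freshness_breached(source_updated: datetime, mart_refreshed: datetime,
                       now: datetime, max_lag: timedelta = timedelta(minutes=30)) -> bool:
    """True if the mart has missed its window to catch up with the latest source update."""
    if mart_refreshed >= source_updated:
        return False  # mart already reflects the latest source data
    # Mart is behind: breach once the allowed lag since the source update has passed.
    return now - source_updated > max_lag


if __name__ == "__main__":
    t0 = datetime(2025, 1, 1, 6, 0, tzinfo=timezone.utc)
    stale_mart = t0 - timedelta(hours=1)
    print(freshness_breached(t0, stale_mart, now=t0 + timedelta(minutes=10)))
    print(freshness_breached(t0, stale_mart, now=t0 + timedelta(minutes=31)))
```

A provisioning layer would act on the first case (still inside the window) by scaling up the refresh, and page someone in the second.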

Early adopters in the beta program report an additional 20 % cost reduction and 99.95 % SLA adherence.

Conclusion: Act Now or Chase the Pack Later

Mage AI is not another incremental orchestrator; it is a paradigm shift that collapses the distance between idea and insight. With AI-accelerated development, zero-lock-in deployment, and proven ROI across e-commerce, fintech, and healthcare, the question is not whether to adopt Mage, but how quickly you can pilot it before your competitors do.

Ready to launch your first pipeline in under five minutes?